Hi guys,

Since some of us have been experimenting with the model training, I thought this thread could serve as a “cutting board” to assemble best practices: confirm or debunk impressions and assumptions.

If this ferments and matures, it can be distilled to Wiki material.

Please add your remarks and ideas for conventions

amp settings

convention: all at noon unless specified

Do you guys set everything at noon?

I tweak a little and try to tune down the gain a bit, even if it concerns “high gain models”.

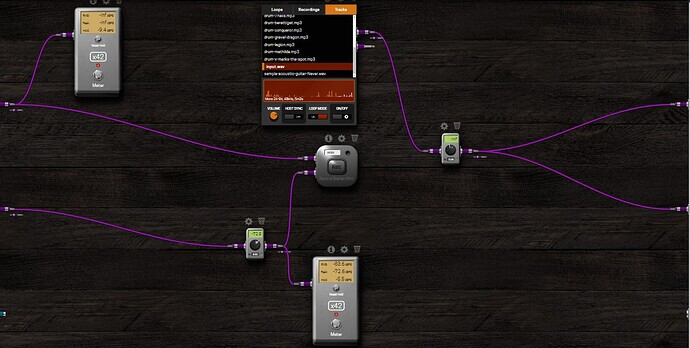

including cab IR’s or not"

convention: amp only, cab included optional extra

Adding a model + a cab IR in your chain can get processor heavy. Combinign amp and cab in one model could be a solution but I didn’t get satisfying results so far. For me the standard is “amp only” for now but I was wondering how it works for the rest.

So when it comes to the input and target file sound files, some specs need to be followed:

File size:

exact same size, down to the number of samples

What length should be optimal?

input file

convention: the input file supplied by MOD Audio

I know there is a demo file and I always use it but would something else be more suitable for lower tuning or acoustic instruments?

sample rate

convention: 48000hz

(and not the typical standard 44100 you find in many tools)

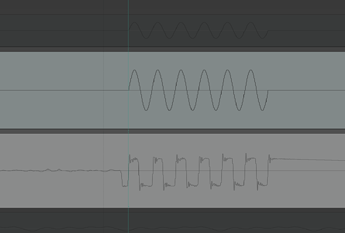

normalisation and peaks

Convention: normalised to -3db? (or-6db?)

I’ve read somewhere we have to aim for the same peak level of -6db, is that correct?

Should both files have this? What is the best way to achieve correct normalisation?

Latency when re-amping

when re-amping plugins on your computer, latency may occur. I haven’t found the best way to mitigate this yet (besides liding to match some wave shapes… which isn’t easy when doing high gain stuff)

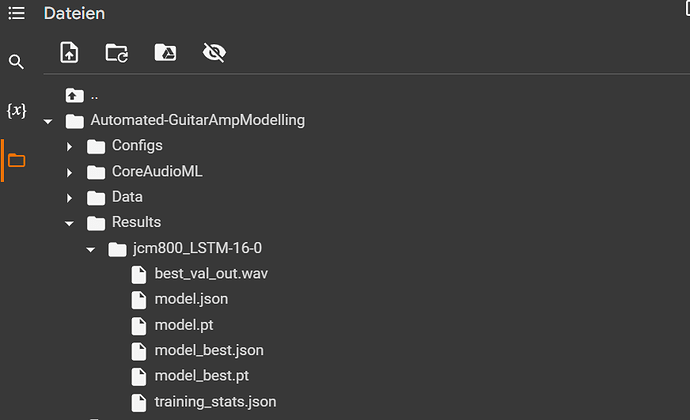

training model type

convention: at least 1 “standard”, a second should be “light” or “lighterst”?

When we train and deliver json files, should we publish a JSON for each trainign model?

(from lightest to heavy)

Who has heard significant results between trainings? For me, the light/lightest training sounds just as good as the standard.

esr results

What intervals can we label and how significant is this value?

Excellent:

Good:

Mixed succes: make sure you listen and compare very carefully before publishing

Poor:

Unusable:

output gain

How do we keep the output gain uniform across the models we provide to the community?

In a different thread I saw the addition of al ine of code to the JSON. Is that an absolute value or do you need to compare with others and deicde how much it should be? what method would be the best way to determine?