Hi @gianfranco, what’s the latest on this? Has any branch with initial work on this been pushed to git? There have already been offers from the community to help with the development; maybe the community could even take it over if MOD is currently too busy with other things like the X release? This functionality could be a quantum leap forward; for example, the Guitarix convolver is just sitting there waiting to be used. So I hope MOD doesn’t have to be the bottleneck for getting this done, considering the great enthusiasm within the community that could be harnessed.

There have been a lot of experiments, but we are very careful about the features we push out.

It cannot be just a hacky thing, though; it needs to be reliable.

Also, once it is out, we have to keep supporting it. So a lot of care and thought goes into how new features will work.

The good news is that any more advanced GUI feature requires a next-level integration of the UI and DSP sides. This would be useful not just for selecting and loading files, but for much, much more.

One of the tests we did was this magical thing:

This is an analyzer plugin, running an FFT and sending data from the DSP side to the UI.

The UI receives the custom messages and draws the FFT.
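As a rough illustration of the DSP half of such an analyzer, here is a minimal Python sketch, assuming one mono block of audio whose length is a power of two. It applies a Hann window, runs a textbook radix-2 FFT and returns magnitudes for bins 0 to n/2. The function names are hypothetical, and the message format MOD used to ship this data to the UI is not described here, so it is not modelled.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def hann(n):
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def analyze_block(block):
    """Window one audio block and return magnitudes for bins 0..n/2.

    A list like this is the kind of data the DSP side could send to the UI
    for drawing (hypothetical; the transport is not shown).
    """
    n = len(block)
    w = hann(n)
    spectrum = fft([s * wi for s, wi in zip(block, w)])
    return [abs(c) for c in spectrum[: n // 2 + 1]]
```

For a 64-sample block containing a sine that completes exactly 8 cycles, the largest magnitude lands in bin 8; the Hann window spreads some energy into bins 7 and 9.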

Messages in the other direction (from the GUI to the DSP, used for example to let a plugin know the user selected a file) have not been done.

There is some careful planning that needs to happen here, as we need to map between LV2 objects and JavaScript ones, and define an API that we will be supporting for a long time.

For now these were just tests to see what could be done and whether it worked. So far so good, as you can see.

At some point this will stop being a hacky thing, but how exactly it works behind the scenes needs to be well defined.

Regarding the audio player, since I was out of the team for a long while, I am not sure of its status.

But I have been working on my own plugin for playing audio files, and one for playing MIDI files too, in my own host.

Repurposing them for MOD would be straightforward (I just need to fix a little bug where looping does not always go back to the correct position).
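For readers curious about the looping issue, here is a hypothetical sketch (not falkTX's plugin code) of a looping player that renders one host buffer at a time. It assumes the sample is already in memory and that the loop region is `[loop_start, loop_end)`. The two points it illustrates are that the wrap is checked per sample rather than once per buffer, and that the read position is carried over between buffers.

```python
def render_loop(sample, start, loop_start, loop_end, frames):
    """Render `frames` samples from `sample`, looping over [loop_start, loop_end).

    Hypothetical helper: returns (buffer, next_position) so the host can
    pass next_position back in on the following cycle.
    """
    if not 0 <= loop_start < loop_end <= len(sample):
        raise ValueError("invalid loop region")
    out = []
    pos = start
    for _ in range(frames):
        out.append(sample[pos])
        pos += 1
        if pos >= loop_end:
            # Wrap to the loop start (not to 0), checked on every sample.
            pos = loop_start
    return out, pos
```

With `sample = list(range(10))`, a loop over `[2, 8)` and a start at 6, a 5-frame buffer yields `[6, 7, 2, 3, 4]` and the next buffer starts at position 5.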

The Duo X launch is kind of halting most of these developments, though, understandably.

Once we finalize everything for the launch, the next phase will be quite exciting.

I would enjoy having these available; I have a bunch of MIDI loops and audio samples I’d love to be able to use from the device. I would pay for them in the store if you published them as “Welcome Back falkTX Special Edition” versions.

FFT calculations (or wavelet transform calculations, which are useful for denoising) can be done with terrific performance on hardware such as GPUs.

In the far future, would it sound completely silly to add a GPU to the MOD’s board in order to dramatically expand its horsepower?

The GPU is already there actually, but disabled by default.

We do not have any plugins right now that make use of it, and none of the MOD software needs it either.

Turning on the GPU also consumes more power and increases temperature, something to take into account.

Do you know of any existing opensource DSP code that makes use of the GPU?

A full-fledged plugin or application would be even better.

I don’t know of anything open source, but I will look into it.

I was wondering about audio/MIDI file playback, as it would be useful.

Just came across this. Posting it here for reference.

Hmm, maybe a convolver that offloads its processing to the GPU… It would have one cycle of latency, but that is not too bad, especially if it means very low CPU usage for a (usually heavy) IR reverb plugin.
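The one cycle of latency comes from pipelining: the audio thread hands block k to the GPU and outputs the already finished result of block k - 1. A minimal Python sketch of that structure, assuming a hypothetical `work` callable that stands in for the GPU job (run synchronously here for simplicity):

```python
class OffloadedProcessor:
    """Hypothetical one-block pipeline around an offloaded `work` function.

    Each call submits the current block and returns the result of the
    previous one, so the output is delayed by exactly one block.
    """

    def __init__(self, block_size, work):
        self.work = work
        # Nothing has been processed yet, so the first cycle outputs silence.
        self.pending = [0.0] * block_size

    def process(self, block):
        ready = self.pending
        # A real implementation would submit asynchronously and collect the
        # result on the next cycle; here it is computed immediately.
        self.pending = self.work(block)
        return ready
```

With a doubling `work` function, the first block in produces silence and the second call returns the doubled first block.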

A quick search online led me to this project: https://github.com/gpu-fftw/gpu_fftw

It uses http://www.aholme.co.uk/GPU_FFT/Main.htm and exposes it through FFTW-compatible calls.

Not sure if this can be used as-is (it is likely hardware-specific), but knowing that people have had success with such tasks is quite promising.

How does one use the FFT for convolution over a finite number of buffer lengths?

I was rather thinking of applications such as adaptive non-linear denoising.

Isn’t that how it always works? Not sure if I get the question…

I am no specialist in how convolution is implemented in processors such as the MOD. But from what I understand about the FFT, it is used for circular convolution over the full time history of the signal, from start to finish. Not sure how you adapt that to a rolling, buffered process such as what JACK does. I’m happy to learn here.

It works by virtue of the IR file being of finite length. So for an N-sample IR, you only need to keep N samples of the audio input to calculate the convolution. At each step you just rotate the input samples, multiply them by the IR samples, and sum it all up. That is pretty easy to apply to a buffered process: you just keep the old samples in memory if the IR is longer than your block size.
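The scheme described above can be sketched as a direct (time-domain) FIR convolver in Python. This is an illustration, not code from any MOD plugin; the class name is hypothetical. It keeps the last `len(ir) - 1` input samples between calls, so it works with any host block size, including blocks shorter than the IR.

```python
class DirectConvolver:
    """Time-domain convolution of a stream with a finite IR, block by block."""

    def __init__(self, ir):
        self.ir = list(ir)
        # The last len(ir) - 1 input samples from previous blocks.
        self.history = [0.0] * (len(self.ir) - 1)

    def process(self, block):
        m = len(self.ir)
        buf = self.history + list(block)  # old samples followed by new ones
        out = []
        for n in range(len(block)):
            # y[n] = sum_k h[k] * x[n - k]; x[n] sits at buf[m - 1 + n].
            acc = 0.0
            for k in range(m):
                acc += self.ir[k] * buf[m - 1 + n - k]
            out.append(acc)
        # Keep only what the next block needs (empty when m == 1).
        self.history = buf[len(buf) - (m - 1):]
        return out
```

Feeding a 12-sample signal through in 3-sample blocks with a 5-sample IR gives the same output as convolving the whole signal at once.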

Does that make sense?

It does make sense, but I don’t see where the FFT fits in the process you describe, although I can see a way to proceed with it now.

Inserts DSP theory:

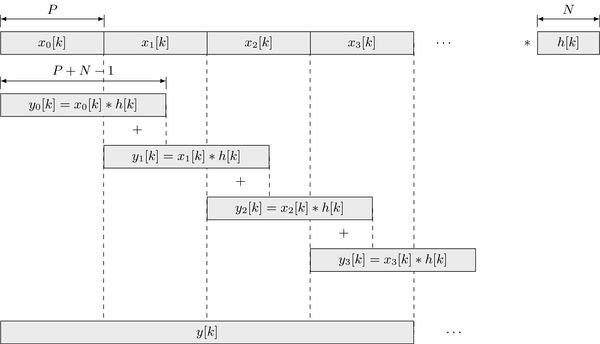

I think what ssj71 tried to explain is a form of linear convolution, but there are some mistakes in it. You need all input samples and not just N samples of audio. An IR of length M convolved with an input array of length N results in an output signal of length N+M-1, where M is typically a few times the size of N. The whole input bucket has to be stored until it has “cycled” through the IR, which usually takes a couple of run cycles. The output bucket is the same size as the input bucket, which means that overlap-add has to be applied to reconstruct the correct output.

Convolution can be done in either the time or the frequency domain. In the time domain it is computed directly as a sum of products (linear convolution). In the frequency domain, the complex spectra (real and imaginary parts) of the input and the IR are multiplied bin by bin, and the inverse Fourier transform is applied to bring the convolved signal back to the time domain.
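A minimal sketch of the frequency-domain route in Python, using a textbook recursive radix-2 FFT (a real plugin would use an optimised library such as FFTW). Both signals are zero-padded to a power of two of at least `len(x) + len(h) - 1` samples, so that the bin-by-bin product yields linear rather than circular convolution. The function names are hypothetical.

```python
import cmath
import math


def fft(x, inverse=False):
    """Recursive radix-2 FFT (unnormalised); len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    sign = 1 if inverse else -1
    even = fft(x[0::2], inverse)
    odd = fft(x[1::2], inverse)
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(sign * 2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def fft_convolve(x, h):
    """Linear convolution of x and h via multiplication of their spectra."""
    out_len = len(x) + len(h) - 1
    n = 1
    while n < out_len:
        n *= 2
    # Zero-pad both signals to the same power-of-two length.
    X = fft(list(x) + [0.0] * (n - len(x)))
    H = fft(list(h) + [0.0] * (n - len(h)))
    # Complex multiplication bin by bin, then back to the time domain.
    y = fft([a * b for a, b in zip(X, H)], inverse=True)
    # The inverse transform above is unnormalised, so divide by n.
    return [v.real / n for v in y[:out_len]]
```

For example, `fft_convolve([1, 2, 3], [1, 1])` returns values within rounding error of `[1, 3, 5, 3]`.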

In practice (what companies generally do when working with these things), the IR is cut to a length of 2^N samples, with 1024 or 2048 being the most commonly used; the remaining samples are generally discarded. The input block is also a power of two, usually 64, 128, 256, 512, etc. Overlap-add has to be implemented here as well, since the output is again M+N-1 samples long.
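The sizing arithmetic can be made concrete with a small helper. This assumes the usual rule that one block of B samples convolved with an M-sample IR produces B + M - 1 samples, and that the FFT length is rounded up to a power of two; the function names are hypothetical.

```python
def next_pow2(n):
    """Smallest power of two that is >= n (for n >= 1)."""
    p = 1
    while p < n:
        p *= 2
    return p


def fft_size(block_size, ir_len):
    """FFT length that holds one block convolved with the whole IR
    (block_size + ir_len - 1 samples) without circular wraparound."""
    return next_pow2(block_size + ir_len - 1)
```

For a 128-sample block and a 1024-sample IR, each block produces 1151 output samples, so a 2048-point FFT is needed; 128 samples are output immediately and the remaining 1023 are added into the following blocks.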

For real-time audio applications, the FFT is almost a no-brainer. I believe FFTW can use NEON, the ARM SIMD instruction set built for parallel processing, which is what you need to compute the FFT butterflies efficiently.
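To show what the butterflies are, here is a plain scalar, iterative radix-2 decimation-in-time FFT in Python. It is a teaching sketch, not how FFTW is implemented; optimised libraries vectorise this kind of arithmetic with SIMD instructions such as NEON.

```python
import cmath
import math


def fft_iterative(x):
    """Iterative radix-2 decimation-in-time FFT; len(x) must be a power of two."""
    a = [complex(v) for v in x]
    n = len(a)
    if n & (n - 1):
        raise ValueError("length must be a power of two")
    # Reorder the input into bit-reversed index order.
    j = 0
    for i in range(1, n):
        bit = n >> 1
        while j & bit:
            j ^= bit
            bit >>= 1
        j |= bit
        if i < j:
            a[i], a[j] = a[j], a[i]
    # Combine sub-transforms of size 2, 4, 8, ... with butterflies.
    size = 2
    while size <= n:
        w_step = cmath.exp(-2j * math.pi / size)
        half = size // 2
        for start in range(0, n, size):
            w = 1 + 0j
            for k in range(half):
                # One butterfly: (u, v) -> (u + w*v, u - w*v)
                u = a[start + k]
                t = w * a[start + k + half]
                a[start + k] = u + t
                a[start + k + half] = u - t
                w *= w_step
        size *= 2
    return a
```

A quick sanity check is that an impulse `[1, 0, 0, 0]` transforms to all ones, and that the result matches a direct O(n²) DFT.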

I suggest watching some online videos about signal processing. There are plenty of professors who explain this topic very well. Just keep in mind that it is NOT easy to grasp, let alone implement yourself.

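Generally, you would add zeros until the bucket is of size 2^N. For spectral analysis a window is also applied (starting with a Hann window, as it is easy to implement); for plain overlap-add convolution the zero-padded block is used as is, since windowing it would alter the result.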

Actually, I did some work long ago on wavelet denoising of signals, so I know the FFT and convolution. What I am very new to is how it is ACTUALLY done in real-life processors such as the Duo’s plugins.

In particular, calculating convolution by multiplying complex Fourier coefficients one by one results in circular convolution, which means that the beginning and end of the input block end up mixed together over a length equal to the IR’s support.
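The wraparound is easy to see numerically. Multiplying two n-point DFTs bin by bin is equivalent to n-point circular convolution, so the hypothetical helpers below compute that circular result directly in the time domain and compare it with linear convolution.

```python
def circular_convolve(x, h, n):
    """n-point circular convolution: what a bin-by-bin DFT product computes."""
    if n < len(x) or n < len(h):
        raise ValueError("n must be at least as long as both inputs")
    xp = list(x) + [0.0] * (n - len(x))
    hp = list(h) + [0.0] * (n - len(h))
    # Indices of h wrap modulo n, so late output samples fold onto early ones.
    return [sum(xp[k] * hp[(i - k) % n] for k in range(n)) for i in range(n)]


def linear_convolve(x, h):
    """Plain linear convolution, len(x) + len(h) - 1 samples long."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xv in enumerate(x):
        for k, hv in enumerate(h):
            y[i + k] += xv * hv
    return y
```

With `x = [1, 2, 3, 4]`, `h = [1, 1, 1]` and `n = 4`, the linear result is `[1, 3, 6, 9, 7, 4]` and the circular one is `[8, 7, 6, 9]`: the last two samples (7 and 4) have wrapped onto the first two. With `n >= 6` the two agree, which is why overlap-add zero-pads; the other common scheme, overlap-save, skips that padding and instead discards the first `len(h) - 1` samples of each circular result.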

So is this portion of the signal simply discarded and spliced with the output of the previous chunk of processing?

Also, I suppose this way of doing things has quite an important impact on latency, doesn’t it?

The remainder of the output signal that does not fit in the output bucket is added to the next bucket on the following run cycle. This is the overlap-add I mentioned above. The image below explains how this is done. This is actually how I made the two cab simulators for MOD a good year ago.
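A minimal streaming overlap-add convolver in Python, as a sketch of the technique described above (not the code of the MOD cab simulators). It assumes a fixed block size. Each block is zero-padded to an FFT size of at least `block_size + len(ir) - 1`, multiplied by the precomputed IR spectrum, and the part that does not fit in the current output block is kept and added into the following blocks. The class name is hypothetical.

```python
import cmath
import math


def fft(x, inverse=False):
    """Recursive radix-2 FFT (unnormalised); len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    sign = 1 if inverse else -1
    even = fft(x[0::2], inverse)
    odd = fft(x[1::2], inverse)
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(sign * 2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


class OverlapAddConvolver:
    def __init__(self, ir, block_size):
        self.block_size = block_size
        self.n = 1
        while self.n < block_size + len(ir) - 1:
            self.n *= 2
        # The IR spectrum is computed once, up front.
        self.ir_spectrum = fft(list(ir) + [0.0] * (self.n - len(ir)))
        # Tail carried over into upcoming blocks.
        self.overlap = [0.0] * (self.n - block_size)

    def process(self, block):
        if len(block) != self.block_size:
            raise ValueError("unexpected block size")
        spec = fft(list(block) + [0.0] * (self.n - self.block_size))
        y = fft([a * b for a, b in zip(spec, self.ir_spectrum)], inverse=True)
        y = [v.real / self.n for v in y]
        # Add what earlier blocks left over for this time range.
        for i, t in enumerate(self.overlap):
            y[i] += t
        out = y[: self.block_size]
        # Everything past this block becomes the new overlap.
        self.overlap = y[self.block_size:]
        return out
```

Because each output block depends only on the current and earlier input blocks, this sketch adds no latency beyond the host buffer itself; the cost is one forward and one inverse FFT per block.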