I just use Helix Native in Reaper.

Hi all!!

Can anyone tell me why I never reach the 500 epochs? It stops way before that and says patience validation or something like that…

Also, how can I reset the code if I want to train multiple models without exiting and redoing everything? If I just replace the target.wav with another capture and click the cells again, it says "neural network found, continuing training", and the result is like a mix with the previous capture, or just the previous capture…

Thanks!

I don’t think that is a negative thing. Doesn’t that mean the algorithm recognizes that it isn’t learning anything new, so it doesn’t need the remaining epochs?

I can’t really answer the second question, since I always select a new folder on my drive for every new model, so I start from that step again.

Hi @Boukman,

The first thing is totally normal. As @LievenDV said, it stops once the model has already reached its best version.

(We should make that prompt easier to understand on our side of the training.)

The second thing is just as you said: it starts the training from the previous results.
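For anyone curious how a "patience" stop usually works, here is a minimal sketch. It is not the actual training script: the function name `train_with_patience`, the `patience` value, and the list of per-epoch validation losses are all assumptions made for illustration. The idea is that training ends early once the validation loss has failed to improve for `patience` epochs in a row, so the 500-epoch limit is only an upper bound.

```python
def train_with_patience(val_losses, max_epochs=500, patience=25):
    """Simulate early stopping over a sequence of per-epoch validation losses.

    Returns (epochs_run, best_loss). All names and defaults are hypothetical.
    """
    best_loss = float("inf")
    epochs_without_improvement = 0
    epochs_run = 0

    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        epochs_run = epoch
        if loss < best_loss:
            # New best model: remember it and reset the patience counter.
            best_loss = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                # No improvement for `patience` epochs in a row: stop early.
                break

    return epochs_run, best_loss
```

In this sketch, a run whose loss stops improving after epoch 3 with `patience=5` ends at epoch 8 and keeps the best loss seen so far, which is why an early stop is not a failure in itself.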

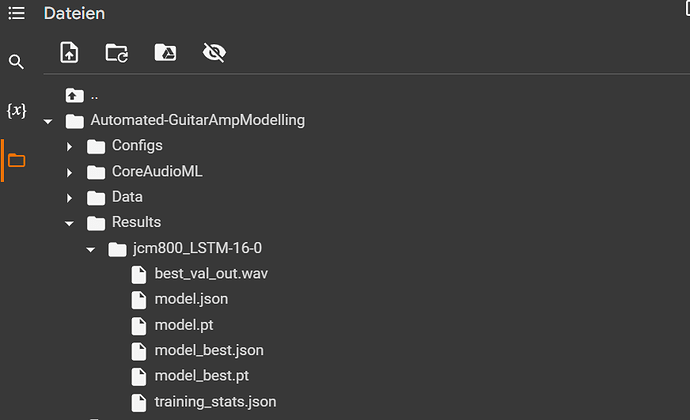

You just have to delete the folder of the models in the Results folder.

Thanks a lot!! I'll try it!!

So, I made a few attempts at making models, but in the end my ESR ended up somewhere between 1.000xxxx and 0.9xxxx, and from what I recall, that’s bad. Also, the best one (0.9xxxx) ended up completely silent. I think my issue was getting the input and target audio lined up perfectly. After recording, I used Audacity to line up the 2 files. I lined up the 2 clicks at the beginning, but by the end of the file there appeared to be some drift.

To combat this, I decided to record both Dwarf outputs to a single stereo track: output 1 going through the amp and then into the audio interface, output 2 going straight to the audio interface. Finally, in Audacity, I split the stereo track into 2 mono tracks and saved one as target.wav and one as input.wav. This solved the problem and my resulting ESR ended up at 0.0012241610093042254, which is a lot better, and it sounds pretty decent. Just thought I’d post this here for anyone else facing similar issues.

Here’s the pedalboard I used to make the recording.
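To put the ESR numbers above in context: ESR (error-to-signal ratio) is commonly defined as the energy of the error divided by the energy of the target. The sketch below uses that plain textbook definition. The actual training script may apply pre-filtering or extra loss terms, so treat the function `esr` as an illustrative assumption rather than the trainer's code.

```python
def esr(target, prediction):
    """Error-to-signal ratio: sum((target - prediction)^2) / sum(target^2)."""
    if len(target) != len(prediction):
        raise ValueError("target and prediction must have the same length")

    signal_energy = sum(t * t for t in target)
    if signal_energy == 0.0:
        raise ValueError("target is silent, ESR is undefined")

    error_energy = sum((t - p) * (t - p) for t, p in zip(target, prediction))
    return error_energy / signal_energy
```

Under this definition, a model that outputs pure silence scores an ESR of 1, and a perfect match scores 0. That fits the report above: a value around 0.9 to 1.0 means the model is doing barely better than silence, which is what you would expect when the input and target files drift out of alignment.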

Should we suggest that people delete their results folder after creating a model?

(as a cleanup courtesy but also to avoid influencing their results if they do a new attempt on a model?)

No. The script should just create a new folder automatically. Easy to do for @itskais, I think.

I tried deleting the file but it didn't work… It stopped at epoch 80 and made a bad file with an ESR of 0.9.

I think it needs a script modification…

If you’d like, you could send me the input and target waves and I’ll try it with my local script.

Thanks, but I disconnected and did it all again and it was OK. I also want to check the levels and try to bring them closer to a normal guitar input.

We can add a reset function in the first cell, to delete the already trained model folders if one wants to train a new model ^^
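A rough idea of what such a reset could look like, as a sketch only. The folder name `Results` comes from the earlier reply in this thread, but its exact location in the notebook, the function name `reset_results`, and the assumption that each model lives in its own subfolder are guesses for illustration.

```python
import shutil
from pathlib import Path


def reset_results(results_dir="Results"):
    """Delete every model subfolder inside results_dir.

    Returns the sorted names of the removed folders. Loose files in
    results_dir are left alone. Path and layout are assumptions.
    """
    root = Path(results_dir)
    removed = []
    if not root.is_dir():
        # Nothing to reset yet (for example on a fresh session).
        return removed

    for child in sorted(root.iterdir()):
        if child.is_dir():
            shutil.rmtree(child)  # removes the folder and everything in it
            removed.append(child.name)
    return removed
```

Calling `reset_results()` at the top of the first cell would make the next run start from scratch instead of continuing from a previously trained network. The deletion is permanent, so download any model you want to keep first.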

Yes, that would be great! Thanks!!

I tried to do some training, but after uploading the files (input, target), I cannot find them anywhere. They seem to be uploaded, but where are they?

(Edit) It does not work with Mozilla; it runs on Chrome.

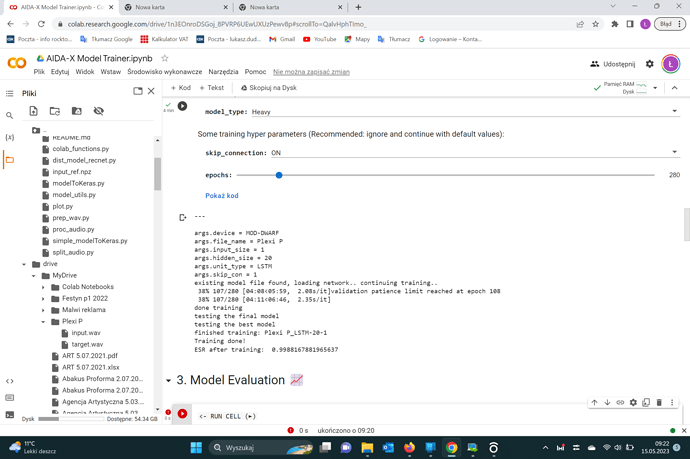

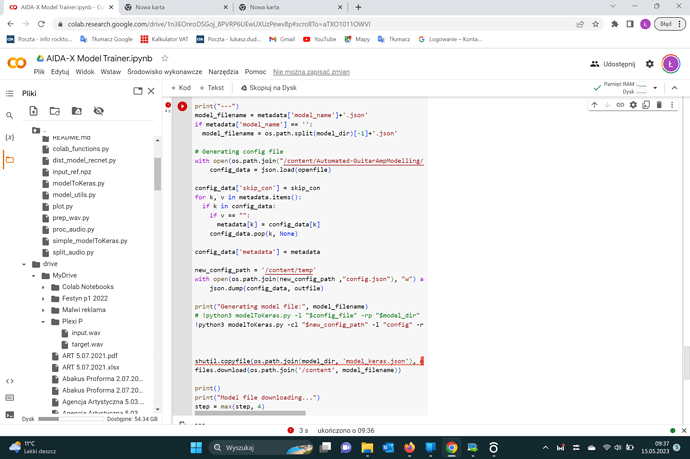

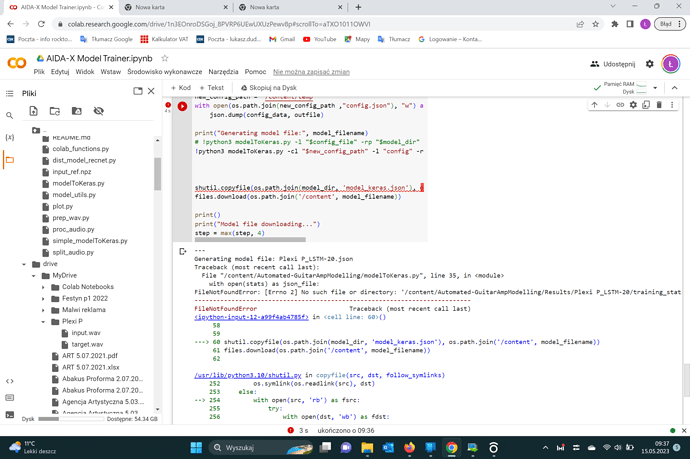

The training is done - and it seems everything is ok:

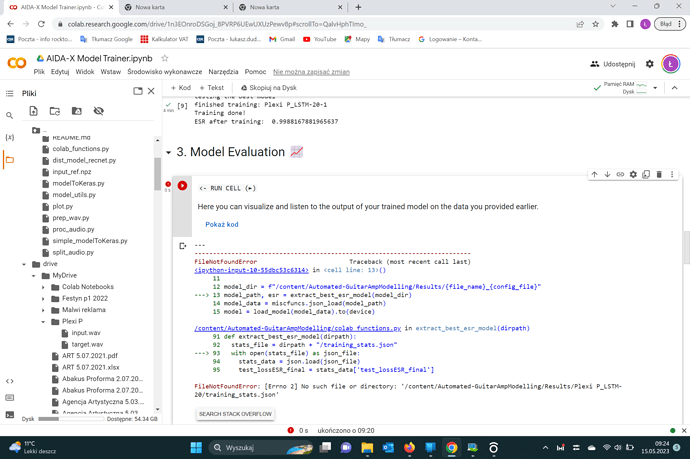

but when I go to further steps there is an error - any idea what I make wrong?

look at this thread

I’ve been really interested in doing some modelling of some pedals, just had to borrow a reamp box.

It’s only just dawned on me that I could just use a DI before the pedal and a DI after. I have a Jad Freer Capo which is already configured with a clean DI of the bass and a DI with my preamps and drives.

Serves me right for not reading the guide! I’d assumed there would be some kind of special input signal that would be used rather than just a guitar signal.

Is there a benefit to using raw guitar as an input for training rather than a specialised signal that sweeps through frequencies and volume etc?

A few uninformed guesses:

- Not everyone has equipment ready for streaming an audio file into their hardware

- A full volume and frequency sweep might produce significantly more weights/parameters to store in the model and require more CPU to run, even if you’re only using a fraction of the spectrum captured in your playing.

- A volume and freq sweep doesn’t capture many of the techniques that guitar players use to alter their tone and dynamics like bends, slides, mutes, polyphony / chords, attack / picking style. These can vary greatly from player to player. If your gig is wailing on low power chords, you probably don’t need the model “cluttered” with response data for bending the high E string.

Yeah makes a lot of sense!

Going to have a play with different guitar/bass combinations and see what works best. Not needing a reamp box makes it significantly easier!

The dataset does not influence how many weights are saved or how much CPU the model consumes. That is determined by the network/configs you choose.

Absolutely correct. You could even go as far as having a model of your bass sound for every specific song or type of music: record a dataset and train on that.
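A quick way to see this: the number of weights can be worked out from the layer sizes alone, without looking at any audio. The sketch below assumes a single LSTM layer followed by a dense output layer, which may not match the architecture the trainer actually uses, and it uses the textbook count with one bias vector per gate. The function names are made up for illustration.

```python
def lstm_param_count(input_size, hidden_size):
    """Textbook LSTM layer: 4 gates, each with input weights,
    recurrent weights and one bias vector."""
    per_gate = hidden_size * input_size + hidden_size * hidden_size + hidden_size
    return 4 * per_gate


def dense_param_count(in_features, out_features):
    """Fully connected layer: weight matrix plus bias."""
    return in_features * out_features + out_features


def model_param_count(hidden_size, input_size=1, output_size=1):
    """Total weights for the assumed LSTM + dense model (mono in, mono out)."""
    return (lstm_param_count(input_size, hidden_size)
            + dense_param_count(hidden_size, output_size))
```

Note that no argument here refers to the recording: a 3-minute capture and a 30-minute capture produce a model of the same size for the same `hidden_size`. Some frameworks store two bias vectors per gate, so their reported totals can differ slightly from this count.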